Youtube Golf Content: Google's Youtube API

Using Google's Youtube API to collect data from Good Good Golf's Youtube channel.

Introduction

The modern dream: become an influencer. Even better than that, become an influencer that doesn’t have to deal with drama that you see with popular tiktok stars. May I introduce you to my favorite Youtube golf channel: Good Good Golf. Imagine making thousands of dollars just for filming yourself play golf. In this project , we will be finding what it takes to become a successful golf Youtuber. In this post, we are going to be using Google’s Youtube API to collect data from Good Good Golf’s Youtube channel.

Motivation

This project is meaningful to me because I love golf and even started an Instagram account to share my golf experiences jdubsclubs on Instagram . Starting a golf youtube channel is something I have always wanted to do, but I have never had a good understanding of what kind of work is required to maintain a successful channel.

Ethics

After reviewing the Youtube API Terms of Service and Youtube Terms of Service I determined that it was ethical to collect this data, as it is publicly available and I am not using it for any malicious purposes. I also did not find anything that would prevent me from sharing my collected data.

Data Collection

To collect the data, I created a Google Cloud Platform account and created a project. I then created an API key and enabled the Youtube Data API v3. The only dependencies that you need to import for this project are the build function from the googleapiclient.discovery library and the pandas library.

To obtain the data, I made two separate requests from the API. The first I made to collect all of the video IDs of each video ever posted on the Good Good Golf channel. One thing to note is that the API only allows you to make 50 requests at a time. To pull all of the video IDs, I set up a while loop that would continue to make rounds of 50 requests until there are no more pages of results available.

# Set up the API client and specify the YouTube API version

youtube = build("youtube", "v3", developerKey=API_KEY)

# Define the ID of the YouTube channel you want to retrieve videos from

channel_id = "UCfi-mPMOmche6WI-jkvnGXw"

# Define the parameters for the API request

request = youtube.search().list(

part="id",

channelId=channel_id,

maxResults=50

)

# Retrieve the first page of video data

response = request.execute()

# Extract the video IDs from the API response

video_ids = [item['id']['videoId'] for item in response['items'] if item['id']['kind'] == 'youtube#video']

# Check if there are more pages of results available

while 'nextPageToken' in response:

next_page_token = response['nextPageToken']

# Define the parameters for the next API request

request = youtube.search().list(

part="id",

channelId=channel_id,

maxResults=50,

pageToken=next_page_token

)

# Retrieve the next page of video data

response = request.execute()

# Extract the video IDs from the API response

video_ids += [item['id']['videoId'] for item in response['items'] if item['id']['kind'] == 'youtube#video']

The second request I made was to collect the statistics of each video using the video IDs. I decided to collect the following columns of data: date, title, view count, like count, and comment count.

data = []

for i in range(0, len(video_ids), 50):

current_video_ids = video_ids[i:i+50]

#make API request to retrieve video details

video_request = youtube.videos().list(

part="snippet,contentDetails,statistics",

id=",".join(current_video_ids),

maxResults=50

)

response = video_request.execute()

#add the video details to the data list

data.extend(response['items'])

#convert the data list to a pandas dataframe

dat = []

for i in range(0, len(data)):

date = data[i]['snippet']['publishedAt']

title = data[i]['snippet']['title']

duration = data[i]['contentDetails']['duration']

views = data[i]['statistics']['viewCount']

likes = data[i]['statistics']['likeCount']

comments = data[i]['statistics']['commentCount']

dat.append({'date': date, 'title': title, 'duration': duration, 'views': views, 'likes': likes, 'comments': comments})

df = pd.DataFrame(dat)

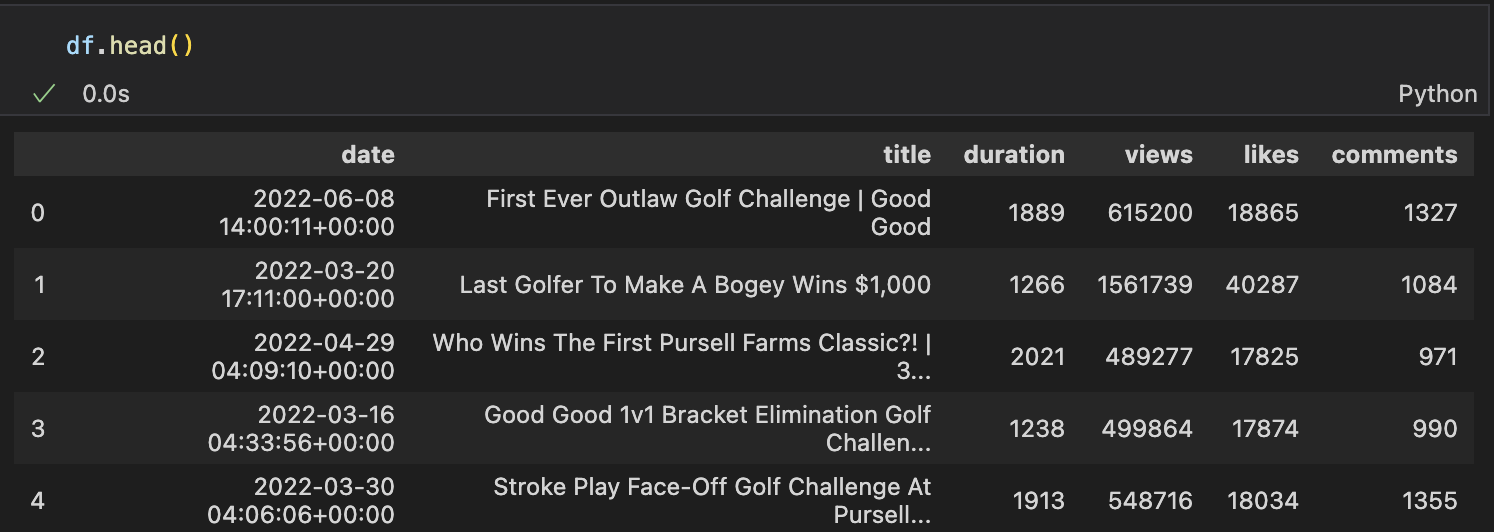

After a quick round of data cleaning, we are left with a dataframe that contains 6 columns of data on 326 videos. The first 5 rows of the dataframe are shown below.

The date column is in form datetime64[ns, UTC]. It was necessary to include the time of day that the video was posted, as there were 36 days that had multiple videos posted.

The title column is the name of the video.

The duration column is in seconds, and has been converted to an integer. That way we don’t have to deal with any decimal points.

The views, likes, and comments columns are all counts of that metric. These columns have also been converted to integers to make them easier to work with.

The full dataframe can be found in My Repository .

Road to Fame

Have you ever wondered what it takes to be a successful Youtuber? Better yet, how about a GOLF Youtuber? I know that everyone and their dog absolutely loves golf with all of their heart and soul (that’s sarcastic), so let’s learn how to become millionaires and hit 1 million subscribers. In my next post I will be analyzing the data I collected to answer these questions.